Automated pig visual sensemaking addresses human limitations

Results of the video data analyses showed that our new approach can recognize a set of standard behaviors at a mean average precision of 96%.

March 3, 2020

Pigs are subjected to a variety of stressors that include weaning, transportation, co-mingling and dietary changes that increase the likelihood of morbidity and mortality upon arrival to a nursery; those animals requiring treatment for clinical signs of morbidity shortly after nursery placement have decreased nursery performance and compromised welfare.

Many infectious agents (e.g., porcine reproductive and respiratory syndrome [PRRS], porcine epidemic diarrhea virus and diarrhea) contribute to increased morbidity and mortality, antibiotic use and, ultimately, economic loss; PRRS alone has been estimated to cost the U.S. swine industry $650 million per year. Pigs are prey animals and therefore often try to hide visual signs of sickness or weakness from human observers, making detection of sick pigs as much an art as it is a science.

Identifying sickness behavior includes recognizing clinical signs such as huddling, shivering, dehydration, fever, anorexia, increased thirst, sleepiness, reduced grooming, altered physical appearance, diarrhea and uncoordinated body movements. Current methods for identifying sick animals have two limitations: i) significant human labor is required to visually observe pigs multiple times per day; and ii) differences among observers in level of training, experience and follow-through result in inconsistent identification of sick pigs. More consistent, earlier identification of sick animals through use of automated visual sensing systems will lessen the industry's need for antibiotic use by increasing animal responsiveness to earlier treatment, positively affecting both consumer acceptance of pork and producer profitability.

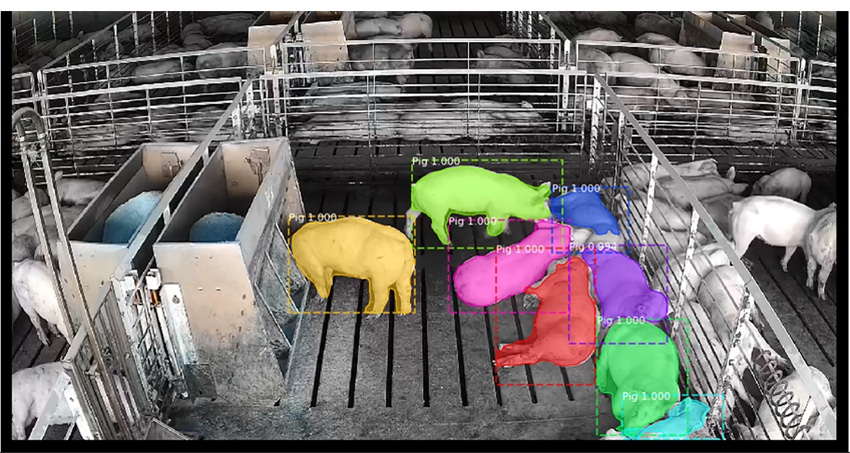

Our research team has created a suite of automated visual sense-making tools for individual pig behavior and health monitoring to address the human limitations of labor and consistency. The idea emerged from the critical need for new and innovative tools that expand precision livestock farming. Comparatively, row crop producers have aggressively implemented precision agriculture tools to improve yields and reduce costs, but the livestock industry has lagged behind the production agriculture community. Consistent, reliable and accurate detection of sick pigs is one of the biggest challenges faced by the swine industry today. The overarching goal of our research is to move from human observation to visual prediction of illness through recognition of pig behavior activity patterns (Figure 1).

Visual sensing is a non-invasive method that relies on a camera node (or array of cameras) to automatically detect, track and, ultimately, measure, usually through machine learning, one or more objects of interest, often at high frequency. Visual sensing tools have been developed for domains such as infrastructure, transportation and even human tracking. To date, this type of technology has not been developed for swine production. This approach is challenging due to pigs being similar in appearance and being in a confined space with uncertain environmental conditions. At present, the industry has few objective tools to identify sick animals, and these are often invasive, costly and susceptible to failure. Currently on-farm, we mainly rely on human caretakers to observe animals.

Visual sensemaking is consistent and flexible, unlike many existing precision livestock farming (PLF) tools, and relies on low-cost visual sensing and activity recognition of individual animal characteristics in high-fidelity, continuous situations. There are three main capability needs that our visual sensemaking tools address: i) an individual pig is recognized as distinct from its pen mates; ii) the behavior of that pig is distinguishable when alone and when with pen mates; and iii) remotely sensed alterations in pig behavior are linked to health state (disease and growth). Because early identification of animals displaying the first signs of infection is valuable, and because current systems monitor pigs only a couple of hours per day, there is a clear and present need for highly accurate and consistent tools that leverage visual sensing and sensemaking.

Three pens (housing approximately 40 pigs per pen) were used to evaluate the visual sense-making tools over a 35-day period. Specific pig behaviors that have been evaluated with the visual sensing approach include resting, eating and drinking. Sickness behaviors extracted with the visual algorithms include reduced feeding, drinking and interactions with pen mates, and increased resting, huddling and shivering.
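As a rough illustration of how shifts such as reduced feeding and increased resting might be surfaced from per-frame behavior labels, the sketch below compares one pig's daily behavior shares against its own baseline. It is not the research team's model: the function names, the label strings and the 10-percentage-point `min_change` threshold are all hypothetical choices made for this example.

```python
def behavior_fractions(labels):
    """Return the share of observed frames spent in each behavior."""
    total = len(labels)
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return {label: count / total for label, count in counts.items()}


def flag_sickness_like_shift(baseline, today, min_change=0.10):
    """List behavior shifts that match the sickness pattern described above.

    min_change is a hypothetical threshold (change in share of frames),
    not a value taken from the study.
    """
    reasons = []
    for behavior in ("eating", "drinking"):
        if baseline.get(behavior, 0.0) - today.get(behavior, 0.0) >= min_change:
            reasons.append(f"reduced {behavior}")
    if today.get("resting", 0.0) - baseline.get("resting", 0.0) >= min_change:
        reasons.append("increased resting")
    return reasons


# Made-up per-frame labels for one pig on a baseline day and on a later day.
baseline_labels = ["resting"] * 50 + ["eating"] * 30 + ["drinking"] * 20
today_labels = ["resting"] * 75 + ["eating"] * 10 + ["drinking"] * 15

baseline = behavior_fractions(baseline_labels)
today = behavior_fractions(today_labels)
flags = flag_sickness_like_shift(baseline, today)
print(flags)
```

Comparing each pig against its own baseline, rather than against a pen-wide average, is one simple way to account for individual differences in activity; a real illness estimation model would need validation against caretaker observations.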

Animal health was assessed through behavioral observations and physiological changes; therefore, the visual precision tools designed, and the illness estimation models that will later be created, were and will be validated against human observation and decision-making. The results of the video data analyses showed that our new approach can recognize a set of standard behaviors (i.e., standing, lying, eating and drinking) at a mean average precision of 96%, and that these data can be combined into individual animal behavior time series that were consistent with the human observations of the video while also permitting automated continuous observation.
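To make the idea of an individual animal behavior time series concrete, here is a minimal sketch that groups per-frame recognition output by pig and collapses consecutive frames into behavior bouts. The record layout `(timestamp_s, pig_id, behavior)`, the pig identifiers and the function names are assumptions made for illustration, not the format used in the study.

```python
from collections import defaultdict
from itertools import groupby


def build_behavior_series(records):
    """Group (timestamp_s, pig_id, behavior) records into one time-ordered series per pig."""
    series = defaultdict(list)
    for timestamp_s, pig_id, behavior in records:
        series[pig_id].append((timestamp_s, behavior))
    for pig_id in series:
        series[pig_id].sort()  # order each pig's observations by timestamp
    return dict(series)


def behavior_bouts(series_for_pig):
    """Collapse consecutive frames with the same behavior into (behavior, start_s, end_s) bouts."""
    bouts = []
    for behavior, group in groupby(series_for_pig, key=lambda item: item[1]):
        frames = list(group)
        bouts.append((behavior, frames[0][0], frames[-1][0]))
    return bouts


# Made-up recognition output, one record per pig per sampled frame.
records = [
    (0, "pig_07", "lying"),
    (1, "pig_07", "lying"),
    (2, "pig_07", "standing"),
    (3, "pig_07", "eating"),
    (4, "pig_07", "eating"),
    (0, "pig_12", "drinking"),
    (1, "pig_12", "standing"),
]

series = build_behavior_series(records)
bouts = behavior_bouts(series["pig_07"])
print(bouts)
```

Bouts of this kind are one convenient unit for comparing automated output against a human observer's notes from the same video, since both can be expressed as a behavior with a start and end time.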

Sources: Joshua Peschel, Anna Johnson, Daniel Linhares, Chris Rademacher, Brett Ramirez and Jason Ross, who are solely responsible for the information provided, and wholly own the information. Informa Business Media and all its subsidiaries are not responsible for any of the content contained in this information asset.
